Mixed Precision

일반적인 neural network에서는 32-bit floating point(FP32) precision을 이용하여 학습을 시키는데, 최신 하드웨어에서는 lower precision(FP16) 계산이 지원되면서 속도에서 이점을 얻을 수 있다. 하지만 FP16으로 precision을 줄이면 수를 표현하는 범위가 줄어들어 학습 성능이 저하될 수 있다.

Mixed Precision은 딥러닝 모델 학습 과정에서 부동소수점 연산의 정밀도를 혼합하여 사용하는 기술로, 학습 속도를 높이고 메모리 사용량을 줄이는 데 도움을 준다. Mixed Precision은 대개 FP32(32비트 부동소수점)와 FP16(16비트 부동소수점)을 조합하여 사용하며, 가중치와 그래디언트는 FP16로 저장하고 연산은 FP16로 수행하면서 일부 연산에서는 FP32로 전환하여 오버플로우 및 언더플로우를 방지한다.

Mixed Precision을 사용하면 일반적으로 메모리를 절약하고 학습 속도를 향상시키면서 정확도는 어느 정도 유지할 수 있기에 모델 학습 시 자주 사용된다.

AUTOMATIC MIXED PRECISION PACKAGE - TORCH.AMP

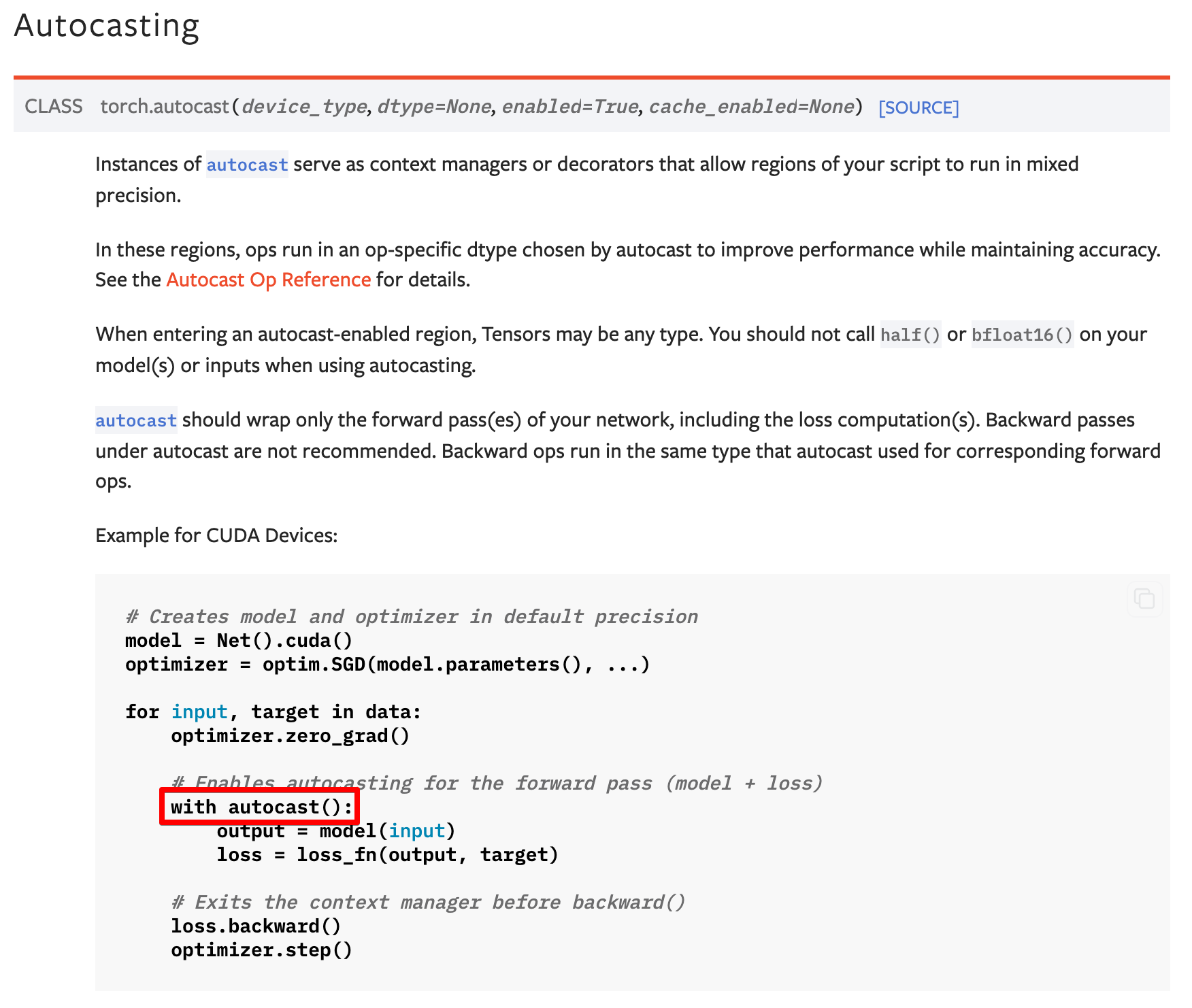

torch.amp의 torch.autocast를 사용하면 pytorch 모델에 mixed precision을 간단하게 적용할 수 있다.

Docs url :https://pytorch.org/docs/stable/amp.html

위 설명에서, ops는 정확도를 유지하면서 성능을 향상시키기 위해 autocast에서 선택한 특정 op dtype에서만 실행된다고 나와 있다. Autocast Op Reference를 보면 자세한 설명을 확인할 수 있다.

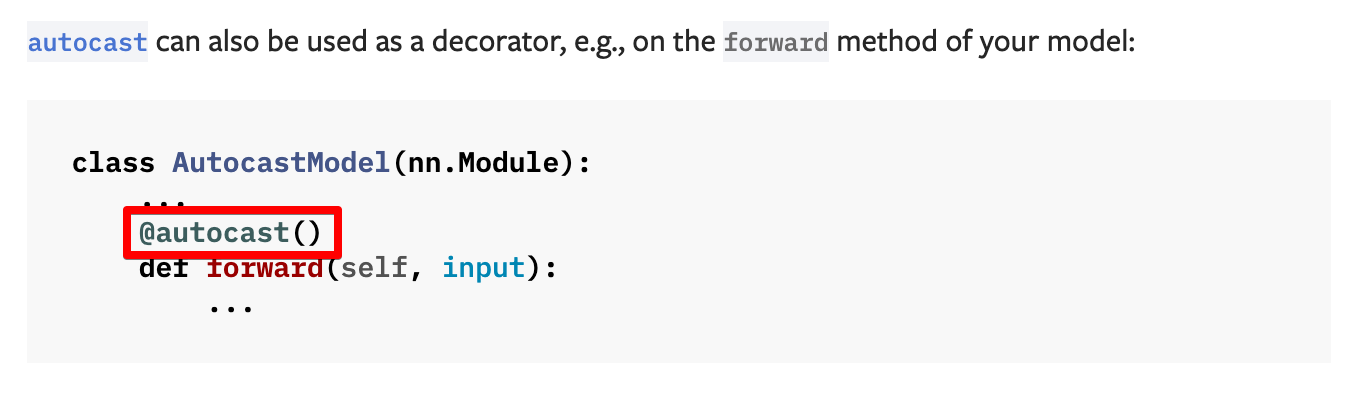

또한 autocast는 아래처럼 forward 메서드의 데코레이터로 사용할 수도 있습니다.

torch.Autocast() 사용 예시

# 학습

import torch

from torch import nn

from torch.cuda.amp import autocast, GradScaler

model = YourModel()

optimizer = YourOptimizer(model.parameters(), lr=learning_rate)

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

model = model.to(device)

scaler = GradScaler()

for epoch in range(num_epochs):

for inputs, targets in dataloader:

inputs, targets = inputs.to(device), targets.to(device)

# autocast를 사용하여 Mixed Precision 활성화

with autocast():

optimizer.zero_grad()

outputs = model(inputs)

loss = criterion(outputs, targets)

# GradScaler를 사용하여 그래디언트 스케일링

scaler.scale(loss).backward()

scaler.step(optimizer)

scaler.update()모델 학습 시에 mixed precision을 사용하기 위해서는 학습 과정에서 위와 같이 with autocat()를 사용해야 한다

# 인퍼런스

import torch

from torch.cuda.amp import autocast

model = YourModel()

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

model = model.to(device)

model.eval() # 모델을 evaluation 모드로 설정

with torch.no_grad():

with autocast():

inputs = inputs.to(device)

outputs = model(inputs)모델 인퍼런스 시 mixed precision을 사용하기 위해서는 위 코드처럼 인퍼런스 코드에서 with autocast()를 사용해도 되고,

import torch

from torch import nn

from torch.cuda.amp import autocast

class YourModel(nn.Module):

def __init__(self):

super(YourModel, self).__init__()

# 모델의 레이어들을 정의합니다.

def forward(self, inputs):

with autocast():

# forward 메서드 내의 연산을 Mixed Precision으로 수행합니다.

x = self.layer1(inputs)

x = self.layer2(x)

x = self.layer3(x)

# ...

return x위와 같이 모델 forward 메서드에서 with autocast()를 사용해도 된다.

'💻 Programming > AI & ML' 카테고리의 다른 글

| [HuggingFace] Swin Transformer 이미지 분류 모델 학습 튜토리얼 (0) | 2023.01.11 |

|---|---|

| [ONNX] pytorch 모델을 ONNX로 변환하고 실행하기 (0) | 2022.12.21 |

| [pytorch] pytorch 모델 로드 중 Missing key(s) in state_dict 에러 (0) | 2022.12.15 |

| [pytorch] COCO Data Format 전용 Custom Dataset 생성 (1) | 2022.06.04 |

| [pytorch] model 에 접근하기, 특정 layer 변경하기 (0) | 2022.01.05 |