오늘은 Video Generation 분야에서 유명한 HunyanVideo 에 대해 살펴보고자 한다.

1. 개요

HunyuanVideo는 대규모 텍스트-비디오 생성 오픈소스 모델로, 고해상도·장면 일관성·모션 자연스러움·프롬프트 충실도 등을 동시에 달성하기 위해 설계된 멀티스케일 비디오 생성 시스템이다. 논문에서는 다음 세 가지를 핵심 목표로 삼는다.

- 고해상도·고품질 비디오 생성 (1080p+)

- 장면·시간적 일관성 강화

- 복잡한 장면 구성 및 상세 묘사 능력 강화

이를 위해 이미지 기반 diffusion 모델을 확장하는 방식이 아니라, 비디오를 직접 다루는 전용 Temporal-DiT 구조를 채택한다.

2. Data Pre-processing

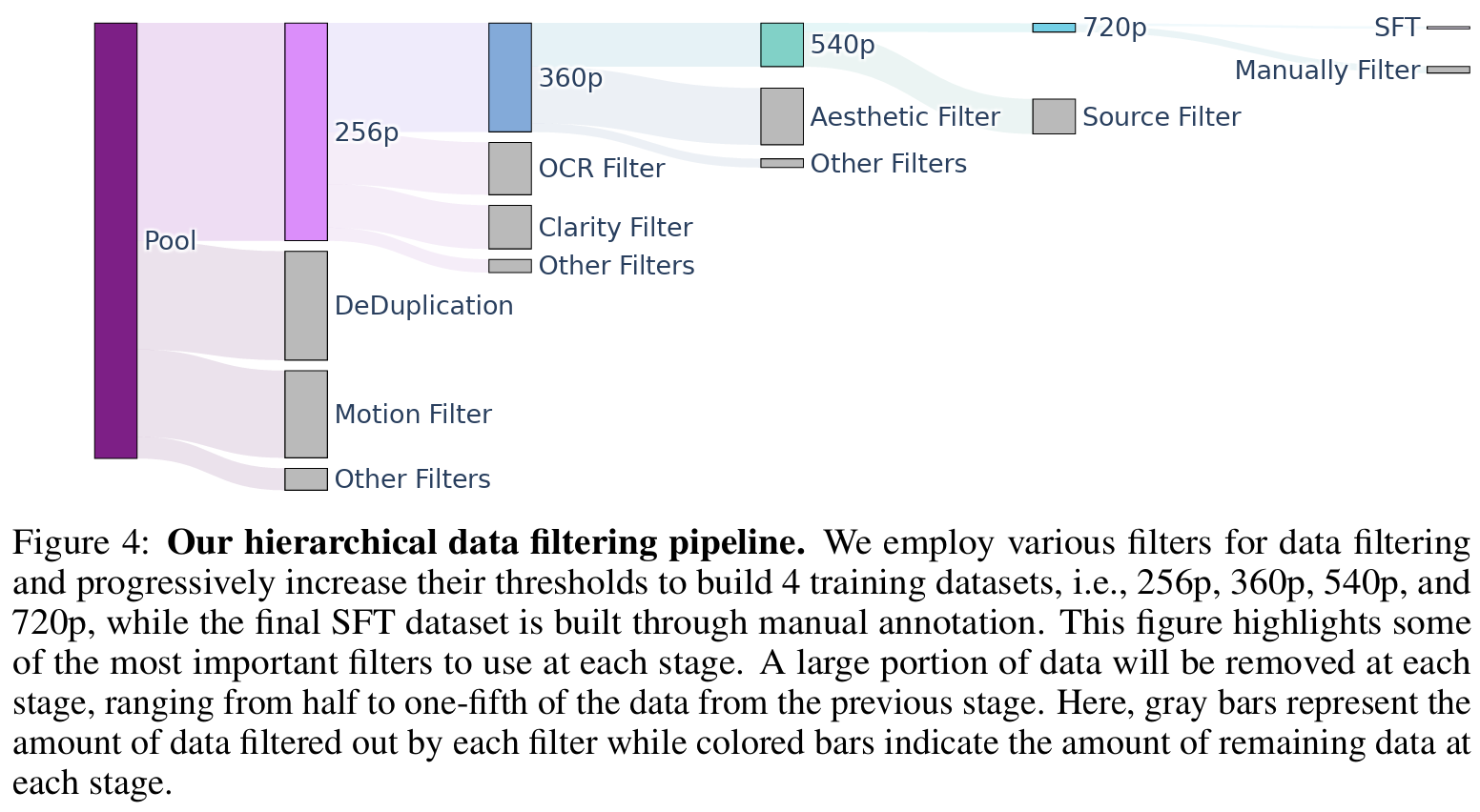

HunyuanVideo는 대규모·고품질 비디오 생성 모델을 만들기 위해 비디오 전처리 파이프라인을 매우 까다롭게 설계했다. 단순히 웹에서 비디오를 수집해 학습하는 방식이 아니라, 이미지 생성 모델에 준하는 정교한 데이터 정제 단계를 수행한다. 전처리 단계는 크게 5단계로 구성된다.

2.1 클립 분할 (Clip Segmentation)

- 웹 비디오를 일정 길이(예: 2~8초) 단위로 잘라 clip을 만든다.

- 너무 짧거나(아주 짧은 정적 장면), 너무 긴 영상은 제외한다.

2.2 영상 품질 필터링 (Quality Filtering)

다양한 VLM 기반 필터를 사용하여 학습에 불필요한 클립을 제거한다.

- 해상도 낮음, 노이즈 심함

- 지나치게 흔들리는 영상

- 화면 거의 정적 (motionless)

- 정보가 없는 배경 영상

- 자막/워터마크가 과도하게 포함된 경우

전처리 필터는 Qwen-VL, CLIP scoring, motion scoring 등을 조합해 구성된다.

2.3 모션 분석 (Motion & Dynamics Filtering)

비디오 생성 모델은 동작 정보가 핵심이므로, 너무 정적인 비디오는 학습에 도움이 되지 않는다.

- Optical Flow 기반 motion intensity 측정

- 너무 정적인 영상은 제외

- 너무 빠르게 움직여 모션 blur가 심한 영상도 제외

2.4 장면 안정성 분석 (Scene Stability)

비디오 내부에서 장면이 과도하게 튀는 경우(씬 컷, 점프컷)는 시간 일관성 학습에 방해된다.

- Shot detection 알고리즘으로 컷 위치 탐지

- 하나의 clip 안에 컷이 여러 번 발생하면 해당 clip 제거

2.5 텍스트 Caption 생성 (MLLM Captioning)

이 단계가 매우 중요하다. 비디오 생성 모델은 정확한 텍스트 조건이 있어야 학습이 안정화된다.

- Qwen2-VL 기반 MLLM으로 상세 비디오 캡션 생성

- 장면 정보 + 객체 + 동작 + 카메라 시점까지 모두 기술하도록 시스템 프롬프트 사용

"A brown dog running along a beach while the camera slowly follows from behind. Waves move softly in the background."

이렇게 고품질 캡션을 생성하여 프롬프트 충실도를 극대화한다.

3. 모델 아키텍처

HunyuanVideo의 모델 아키텍처는 크게 세 부분으로 나뉜다.

- Causal 3D VAE: 비디오/이미지를 시공간(latent) 공간으로 압축하는 모듈

- Diffusion Backbone (Video DiT): 3D latent 상에서 비디오를 생성하는 Transformer

- Text Encoder(MLLM + CLIP): 풍부한 텍스트 조건을 생성하는 모듈

이 세 모듈이 합쳐져 "텍스트 → 3D latent 비디오 → 픽셀 비디오"로 이어지는 end-to-end 생성 파이프라인을 구성한다.

3.1 Causal 3D VAE

다른 비디오 모델들은 종종 이미지 VAE를 먼저 학습한 뒤, 시간 축을 얹는 방식을 사용하지만, HunyuanVideo는 아예 비디오 전용 3D VAE를 처음부터 따로 학습하는 방식을 채택한다. 이 모듈의 목적은 다음과 같다.

- 고해상도 비디오를 시공간을 모두 압축한 latent 공간으로 변환

- 이후 Diffusion Transformer가 처리해야 할 토큰 수를 크게 줄여 학습·추론을 가능하게 함

압축 방식

입력 비디오의 크기를 (T+1)×3×H×W라고 하면, 3D VAE는 CausalConv3D를 반복적으로 적용해 다음과 같은 latent를 출력한다.

- 출력 크기: ((T/ct)+1) × C × (H/cs) × (W/cs)

- 논문 기본 설정: ct = 4, cs = 8, C = 16

즉, 시간축은 4배, 공간축은 8배 줄이고 채널을 16으로 확장한 형태의 시공간 latent로 변환하는 구조이다. 이로 인해 1080p 비디오라도 latent 상에서는 훨씬 작은 크기로 처리할 수 있다.

CausalConv3D를 쓰는 이유

- 비디오의 시간 순서를 보존하면서도 효율적으로 인코딩하기 위함이다.

- Causal 구조를 사용해 "현재 프레임은 과거 프레임만 참고"하도록 설계하여, 향후 autoregressive 확장이나 온라인 처리에도 유리하다.

학습 시 특징

- 이미지와 비디오를 4:1 비율로 섞어 학습하여, 정적 이미지와 동적 비디오 모두 잘 재구성하도록 한다.

- L1, KL loss뿐 아니라 LPIPS(perceptual loss), GAN loss를 함께 사용하여 시각적 품질을 끌어올린다.

- 고속 모션 비디오 재구성을 위해 프레임 간 간격을 랜덤 샘플링하는 전략을 사용한다.

이렇게 학습된 3D VAE는 이후 Diffusion 단계에서 "입력/출력 비디오를 오갈 수 있는 압축 표현 공간"으로 사용된다.

3.2 Unified Image & Video Diffusion Backbone (Video DiT)

HunyuanVideo의 핵심은 이미지와 비디오를 하나의 통합 Transformer(DiT)로 처리하는 것이다. 논문에서는 이를 "Unified Image and Video Generative Architecture"라고 부른다.

입력 구성

- 비디오/이미지 latent

- 3D VAE를 거친 latent: T×C×H×W

- 이미지는 "프레임이 1개인 비디오"로 취급하여 동일한 포맷으로 처리한다.

- 텍스트 조건(hidden states)

- MLLM(Hunyuan-Large 계열)로 텍스트를 인코딩한 토큰 시퀀스

- CLIP text encoder에서 추출한 global text embedding (마지막 토큰)도 함께 사용하여 전역적인 의미를 보강한다.

- 노이즈 및 시간 스텝 정보

- Rectified Flow 기반 diffusion이므로 time step t에 해당하는 조건이 포함된다.

3D Patchification

비디오 latent는 3D Conv(커널 크기 kt×kh×kw)를 통해 시공간 패치 토큰으로 변환된다.

- 토큰 개수: (T/kt) × (H/kh) × (W/kw)

- 각 토큰은 (kt×kh×kw×C) 차원의 벡터로 flatten된다.

이렇게 되면 최종적으로 "비디오 전체가 1D 토큰 시퀀스"로 펼쳐져 Transformer에 입력된다.

3.3 Full Spatio-temporal Attention + Dual/Single Stream 구조

기존 비디오 Diffusion 모델(Open-Sora, Imagen Video, MagicVideo 등)은 주로 다음과 같은 구조를 사용해왔다.

- 2D 공간 U-Net + 1D Temporal block

- 또는 공간/시간을 분리한 factorized attention (2D + 1D)

이 방식은 연산량을 줄이는 장점은 있지만, 공간·시간 상호작용을 충분히 모델링하기 어렵고, 장면·카메라 모션 일관성이 떨어지는 문제가 있다.

HunyuanVideo는 FLUX에서 사용한 것과 유사한 Dual-flow Attention 블록을 비디오 영역으로 확장하고, 공간·시간을 완전히 통합한 Full Attention Transformer를 사용한다.

Dual-stream → Single-stream

- Dual-stream 단계

- 영상 토큰과 텍스트 토큰을 서로 다른 스트림에서 각각 처리

- 각 모달리티가 자기 표현을 충분히 학습하도록 돕는 단계

- Single-stream 단계

- 이후 두 스트림의 토큰을 concat하여 하나의 Transformer에 넣어 멀티모달 joint attention을 수행

- 이 단계에서 텍스트 조건과 비디오 latent가 깊게 융합

이 구조 덕분에, 초기에는 비전-텍스트 표현이 서로 간섭 없이 안정적으로 학습되고, 후반에는 복잡한 멀티모달 상호작용을 포착할 수 있다.

Full Spatio-temporal Attention의 특징

- 토큰 간 self-attention이 시간·공간을 가리지 않고 전 범위에서 계산된다.

- 특정 프레임의 객체는 다른 프레임의 같은 객체와 직접 attention을 주고받을 수 있다.

- 결과적으로 대상 형태 유지, 모션 일관성, 카메라 움직임 표현이 이전 2D+1D 구조보다 우수하다.

3.4 3D RoPE: 시간·공간을 동시에 인코딩하는 포지션 임베딩

비디오는 시간 T, 세로 H, 가로 W라는 세 차원의 위치 정보를 모두 필요로 한다. HunyuanVideo는 이를 위해 3D 확장 RoPE(Rotary Position Embedding)을 사용한다.

- RoPE를 시간 T, 높이 H, 너비 W 축에 대해 각각 계산한다.

- query/key 채널을 (dt, dh, dw) 세 덩어리로 나눈 뒤, 각 덩어리에 대응하는 축의 RoPE를 곱한다.

- 이후 다시 concat하여 최종 query/key를 구성하고 attention을 계산한다.

이 방식은 다음과 같은 장점을 제공한다.

- 다양한 해상도·비율·길이의 비디오를 하나의 모델로 처리 가능

- 시간 축 extrapolation 능력이 향상되어 더 긴 비디오에도 일반화 가능

- 공간·시간 관계를 더 자연스럽게 학습

3.5 텍스트 인코더: MLLM + Bidirectional Refiner + CLIP

HunyuanVideo는 단순한 CLIP text encoder나 T5 계열이 아니라, visual instruction 학습이 끝난 멀티모달 LLM(MLLM)을 텍스트 인코더로 사용한다. 이 선택의 이유는 다음과 같다.

- MLLM은 시각적 문맥에 맞춘 세밀한 설명 능력을 가지고 있어, 비디오 프롬프트에 자주 등장하는 카메라 샷, 장면 전환, 분위기 등을 풍부하게 표현할 수 있다.

- 시스템 프롬프트를 통해 모델 친화적인 prompt 스타일로 유도할 수 있어, diffusion backbone이 이해하기 쉬운 텍스트 임베딩을 제공한다.

- causal attention 기반이기 때문에 autoregressive 프롬프트 처리와 잘 맞는다.

단, diffusion 모델 입장에서는 양방향 정보를 가진 텍스트 representation이 더 유리하기 때문에, 논문에서는 [Token Refiner] 블록을 추가해 MLLM의 causal feature를 bidirectional 형태로 다시 정제한다. 여기에 CLIP-Large text의 마지막 토큰을 global guidance로 추가하여, 세밀한 MLLM feature와 전역적인 CLIP feature를 함께 사용하는 구조이다.

3.6 다른 비디오 생성 모델과의 차이점 정리

정리하면, HunyuanVideo의 아키텍처는 기존 open-source 비디오 모델들과 다음과 같은 차별점을 가진다.

- 비디오 전용 3D VAE를 처음부터 학습한다.

- 많은 모델이 이미지 VAE를 재활용하는 것과 달리, 비디오에 최적화된 CausalConv3D VAE를 사용하여 고해상도·고프레임 비디오를 직접 latent 공간에서 다룬다.

- Full Spatiotemporal Attention을 사용하는 통합 Transformer이다.

- 2D + 1D factorized attention 대신, 시간·공간을 한 번에 다루는 full attention을 통해 더 강한 모션·장면 일관성을 얻는다.

- Dual-stream → Single-stream 구조로 멀티모달 융합을 수행한다.

- 초기에는 텍스트/비전 표현을 분리해 안정적으로 학습하고, 후반에는 하나의 스트림에서 깊게 융합하는 설계이다.

- 텍스트 인코더로 MLLM을 사용한다.

- CLIP/T5 기반보다 세밀한 장면·카메라·스타일 묘사를 잘 반영하며, token refiner + CLIP global feature로 보완한다.

이러한 설계 덕분에 HunyuanVideo는 이미지와 비디오를 모두 다룰 수 있는 unified generative backbone이면서, 동시에 고해상도·장시간·고품질 비디오 생성에 최적화된 아키텍처라고 볼 수 있다.

3.7 Model Scaling

Figure 10은 DiT-T2X 모델 패밀리(이미지·비디오 생성 모델)의 Loss vs Compute(FLOPs) 관계를 기반으로 두 가지 주요 scaling 법칙을 도출한다.

(1) Compute C ↔ Model Parameters N scaling law

(2) Compute C ↔ Dataset Tokens D scaling law

즉, 대규모 비디오 생성 모델이 scale up 할수록 더 성능이 좋아진다는 것을 보여주고, 최적 모델과 데이터 크기를 수치적으로 결정할 수 있다는 것을 뜻하기도 한다.

4. 학습 방법

HunyuanVideo는 대규모 영상 데이터 학습을 위한 특별한 전략을 사용한다.

4.1 Multi-Stage Training

- Stage 1: 이미지 사전학습(Image Pretraining)

- 2D 이미지 데이터를 이용해 공간적 이해력 확보

- Image-DiT로 초반 안정화

- Stage 2: 저해상도 비디오 학습(Low-Res Video)

- 256p/360p short clip 위주 학습

- Temporal attention 안정화

- Stage 3: 고해상도 비디오 학습(High-Res Video)

- 1080p·4K까지 확장

- Long-range temporal memory 학습

이 3단계 구조는 compute 효율성과 비디오 품질을 동시에 달성하기 위해 필요하다.

4.2 Balanced Training

비디오 프롬프트는 이미지보다 훨씬 복잡하므로, 텍스트 이해 능력을 강화하기 위해 LLaMA 기반 텍스트 인코더를 특별히 튜닝한다.

텍스트 퀄리티가 낮으면 다음 문제가 생긴다.

- camera instruct가 잘 안 지켜짐

- 동작 묘사 누락

- 캐릭터 consistency 저하

이 문제를 해결하기 위해 텍스트 파트와 비디오 파트를 별도로 학습하면서도 cross-attention에서 균형이 맞도록 학습률 스케줄을 조정한다.

4.3 Long-Video Training

일반 비디오 모델은 2~5초 길이에 국한되지만, HunyuanVideo는 10~30초 비디오도 처리한다.

이를 위해 아래와 같은 기법을 사용한다.

- long-context temporal attention

- video chunking

- temporal hierarchical encoding

5. 샘플링 방식

HunyuanVideo는 Rectified Flow 기반의 sampling을 사용하며, 다음과 같은 방식으로 비디오를 생성한다.

- Text Encoder로 prompt 임베딩 생성

- Noise 비디오 latent 준비

- Video-DiT가 iterative denoising 수행

- 완성된 latent를 Video-VAE Decoder로 복원

- 최종 비디오 생성

프레임 단위가 아닌 spatiotemporal latent 단위로 샘플링하기 때문에 모션 일관성이 뛰어나다.

6. 실험 결과

HunyuanVideo는 다양한 벤치마크에서 높은 성능을 기록한다.

- 고해상도 품질(1080p+) 우수

- 캐릭터 일관성, 카메라 모션 일관성 향상

- 복잡한 장면에서도 artifact 감소

- 장시간 비디오 생성 가능

실제 샘플에서도 안정적인 모션 흐름과 디테일 재현력이 두드러진다.

HunyuanVideo는 대규모 비디오 생성 모델이 어떻게 설계되고, 어떤 방식으로 안정적인 scaling을 달성할 수 있는지를 명확하게 보여주는 대표적 연구이다. 3D VAE·Full Spatiotemporal Attention·MLLM 기반 텍스트 조건 등 설계 전반이 유기적으로 결합되어 고해상도·장시간·일관성 있는 비디오 생성이 가능해졌다. 이를 통해 비디오 생성 모델 역시 이미지·언어 모델처럼 본격적인 파운데이션 모델로 확장될 수 있음을 입증한 의미 있는 사례라 할 수 있다.