This paper was presented at NeurIPS 2021 and proposes SegFormer, a simple yet powerful Transformer for the semantic segmentation task.

Abstract

This paper proposes SegFormer, a simple, efficient, yet powerful semantic segmentation framework. 1) SegFormer consists of a novel hierarchically structured Transformer encoder that extracts multi-scale features. The encoder needs no positional encoding, which avoids the interpolation of positional codes that degrades performance when the test resolution differs from the training resolution. 2) SegFormer also uses a simple MLP decoder that avoids complex designs. The proposed MLP decoder aggregates information from different layers, thereby combining local attention and global attention to render powerful representations.

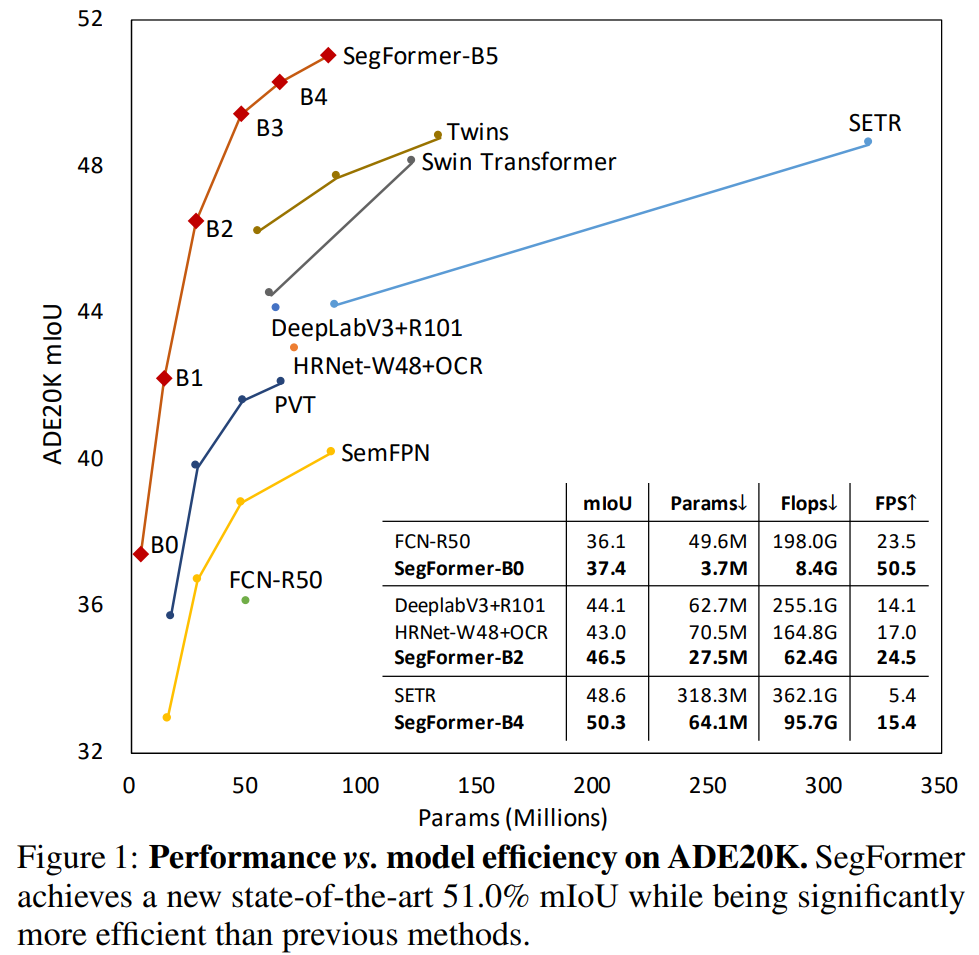

As the figure above shows, on the ADE20K dataset SegFormer reaches a considerably higher mIoU than other models while using fewer parameters.

The novelties of this work are as follows.

- A Hierarchical Transformer Encoder that needs no positional encoding

- A Lightweight All-MLP Decoder that yields powerful representations without complex, computationally heavy modules

- State-of-the-art efficiency, accuracy, and robustness on three semantic segmentation benchmark datasets

Method

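As shown in Figure 2, SegFormer consists of a Hierarchical Transformer Encoder and a Lightweight All-MLP Decoder. Given an input image of size H x W x 3, it is first divided into patches of size 4x4. Compared with ViT, which uses 16x16 patches, these finer patches favor the dense prediction that segmentation requires. The patches are fed to the encoder to obtain multi-level features at {1/4, 1/8, 1/16, 1/32} of the original image resolution. The multi-level features are then passed to the decoder, which predicts the segmentation mask at a resolution of (H/4) x (W/4) x Ncls, where Ncls is the number of categories.

Hierarchical Transformer Encoder

The paper designs a series of Mix Transformer encoders (MiT), MiT-B0 through MiT-B5, which share the same architecture but differ in size.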

# Hierarchical Feature Representation

Unlike ViT, the SegFormer encoder generates multi-level, multi-scale features from an input image. These provide both high-resolution coarse features and low-resolution fine-grained features, which improve segmentation performance.

# Efficient Self-Attention

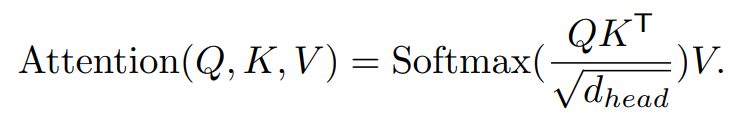

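In standard multi-head self-attention, the Q, K, and V of each head have the same dimensions N x C, where N = H x W is the sequence length, and self-attention is computed as:

$$\mathrm{Attention}(Q, K, V) = \mathrm{Softmax}\left(\frac{QK^{\top}}{\sqrt{d_{head}}}\right)V$$

Because the SegFormer encoder uses 4x4 patches, it has far more patches than ViT, and the O(N^2) cost of self-attention becomes prohibitive. To address this, the authors use a sequence reduction process, which shortens the sequence with a predefined reduction ratio R as follows:

$$\hat{K} = \mathrm{Reshape}\left(\frac{N}{R},\, C \cdot R\right)(K)$$

$$K = \mathrm{Linear}(C \cdot R,\, C)(\hat{K})$$

Here K is the sequence to be shortened, Reshape() reshapes K to (N/R) x (C·R), and Linear(Cin, Cout) is a linear layer that takes a Cin-dimensional input and produces a Cout-dimensional output. The new K therefore has dimensions (N/R) x C, and the complexity of the self-attention mechanism drops from O(N^2) to O(N^2/R). The paper sets R to [64, 16, 4, 1] for stage-1 through stage-4.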

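* Put simply, R consecutive tokens are folded into the channel dimension and a linear layer projects them back to C channels, so it is the sequence length of K, not the channel count, that shrinks before multi-head self-attention runs. The paper's explanation feels rather long-winded for such a simple idea.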
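The sequence reduction can be sketched in NumPy as follows. This is a minimal single-head illustration, not the authors' implementation: the function names and the random weights `w`, `b` are hypothetical. The paper's formula is written for K; V is shortened in the same way here so that the shapes agree, and sharing one weight matrix for both is a simplification of this sketch.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def reduce_sequence(x, w, b, R):
    """Reshape (N, C) -> (N/R, C*R), then project back to C channels."""
    N, C = x.shape
    assert N % R == 0, "sequence length must be divisible by R"
    x_hat = x.reshape(N // R, C * R)  # R consecutive tokens folded into channels
    return x_hat @ w + b              # (N/R, C)

def efficient_attention(q, k, v, w, b, R):
    """Attention whose score matrix is (N, N/R) instead of (N, N)."""
    k_r = reduce_sequence(k, w, b, R)
    v_r = reduce_sequence(v, w, b, R)  # assumption: V is reduced like K
    scores = q @ k_r.T / np.sqrt(q.shape[-1])
    return softmax(scores) @ v_r, scores

rng = np.random.default_rng(0)
N, C, R = 64, 8, 4                     # illustrative sizes
q, k, v = (rng.normal(size=(N, C)) for _ in range(3))
w = rng.normal(size=(C * R, C))        # hypothetical Linear(C*R, C) weights
b = np.zeros(C)

out, scores = efficient_attention(q, k, v, w, b, R)
print(out.shape, scores.shape)         # (64, 8) (64, 16)
```

The output keeps the full length N, while the score matrix has only N/R columns, which is where the O(N^2/R) cost comes from.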

# Overlapped Patch Merging

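Given an input image, the patch merging process in ViT represents an N x N x 3 patch as a 1 x 1 x C vector. This extends naturally to merging a 2 x 2 x Ci feature patch into a 1 x 1 x Ci+1 vector, which yields hierarchical feature maps. In this way the features can be shrunk from F1 (H/4 x W/4 x C1) to F2 (H/8 x W/8 x C2), and the same step can be repeated for every subsequent feature map in the hierarchy.

However, this process was originally designed to combine non-overlapping image or feature patches, so it fails to preserve local continuity around those patches.

SegFormer therefore uses Overlapped Patch Merging. It defines K as the patch size, S as the stride between two adjacent patches, and P as the padding size, so that neighboring patches share an overlapping region. In the experiments, K = 7, S = 4, P = 3 and K = 3, S = 2, P = 1 are used so that overlapped patch merging produces features of the same size as the non-overlapping process.

In short, conventional patch merging has no overlap between patches and therefore loses local continuity, so SegFormer picks the stride and padding such that the extracted patches overlap.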
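A quick way to check that these settings keep the feature sizes unchanged is the usual convolution output-size formula, floor((size + 2P - K) / S) + 1. The sketch below assumes that K = 7, S = 4, P = 3 is applied at the first stage and K = 3, S = 2, P = 1 at the remaining three; the helper names and the 512-pixel input are illustrative.

```python
def conv_out_size(size, k, s, p):
    """Output length of a convolution-style patch extraction along one axis."""
    return (size + 2 * p - k) // s + 1

def stage_sizes(size, settings):
    """Apply the (K, S, P) setting of each stage in turn and collect the sizes."""
    sizes = []
    for k, s, p in settings:
        size = conv_out_size(size, k, s, p)
        sizes.append(size)
    return sizes

# (K, S, P) per stage; the stage assignment is an assumption of this sketch.
OVERLAPPED = [(7, 4, 3), (3, 2, 1), (3, 2, 1), (3, 2, 1)]
NON_OVERLAPPED = [(4, 4, 0), (2, 2, 0), (2, 2, 0), (2, 2, 0)]

print(stage_sizes(512, OVERLAPPED))      # [128, 64, 32, 16]
print(stage_sizes(512, NON_OVERLAPPED))  # [128, 64, 32, 16]

# Adjacent patches share K - S pixels along each axis when K > S.
print([k - s for k, s, _ in OVERLAPPED])  # [3, 1, 1, 1]
```

Both variants give 1/4, 1/8, 1/16, and 1/32 of the input size; only the overlapped one lets neighboring patches see shared pixels.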

# Positional-Encoding-Free Design

In ViT the resolution of the Positional Encoding (PE) is fixed, so the PE must be interpolated whenever the test resolution differs from the training resolution. Because resolutions often differ in segmentation tasks, this leads to a drop in accuracy.

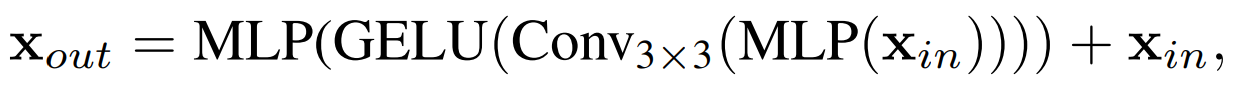

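SegFormer proposes Mix-FFN, which uses a 3x3 Conv directly in the Feed Forward Network (FFN) and thereby exploits the fact that zero padding leaks location information. It is defined as:

$$x_{out} = \mathrm{MLP}(\mathrm{GELU}(\mathrm{Conv}_{3 \times 3}(\mathrm{MLP}(x_{in})))) + x_{in}$$

Here xin is the feature from the self-attention module, and Mix-FFN mixes a 3x3 Conv and an MLP into each FFN. The experiments show that a 3x3 Conv is sufficient to provide positional information to the Transformer. For efficiency, depth-wise convolution is used.

With this design, the authors argue that there is no need to add PE to the feature maps for semantic segmentation.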
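The Mix-FFN computation, MLP followed by a depth-wise 3x3 Conv, GELU, a second MLP, and a residual connection, can be sketched in NumPy as below. This is an illustrative sketch under stated assumptions rather than the paper's code: the function names, the tanh approximation of GELU, and all weights are hypothetical. The small demo at the end shows how zero padding makes a constant input produce position-dependent outputs.

```python
import numpy as np

def gelu(x):
    """tanh approximation of GELU (an assumption of this sketch)."""
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x**3)))

def depthwise_conv3x3(x, kernel):
    """x: (H, W, C), kernel: (3, 3, C). Zero padding of 1 keeps H x W."""
    H, W, _ = x.shape
    padded = np.pad(x, ((1, 1), (1, 1), (0, 0)))  # constant zeros by default
    out = np.zeros_like(x)
    for i in range(3):
        for j in range(3):
            # Each channel is filtered independently (depth-wise).
            out += padded[i:i + H, j:j + W, :] * kernel[i, j, :]
    return out

def mix_ffn(x_in, w1, b1, kernel, w2, b2):
    """MLP -> depth-wise 3x3 Conv -> GELU -> MLP, plus the residual x_in."""
    h = x_in @ w1 + b1
    h = depthwise_conv3x3(h, kernel)
    h = gelu(h)
    return h @ w2 + b2 + x_in

rng = np.random.default_rng(0)
H, W, C, hidden = 4, 4, 8, 16             # illustrative sizes
x = rng.normal(size=(H, W, C))
w1, b1 = rng.normal(size=(C, hidden)), np.zeros(hidden)
w2, b2 = rng.normal(size=(hidden, C)), np.zeros(C)
kernel = rng.normal(size=(3, 3, hidden))
y = mix_ffn(x, w1, b1, kernel, w2, b2)
print(y.shape)                            # (4, 4, 8)

# Constant input, all-ones kernel: borders see padded zeros, the interior does not.
border_demo = depthwise_conv3x3(np.ones((4, 4, 1)), np.ones((3, 3, 1)))[:, :, 0]
print(border_demo[0, 0], border_demo[0, 1], border_demo[1, 1])  # 4.0 6.0 9.0
```

Because corner, edge, and interior positions respond differently to the same input, the convolution gives the network a cue about where a token sits without any explicit PE.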

Lightweight All-MLP Decoder

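SegFormer incorporates a lightweight decoder consisting only of MLP layers, which avoids the hand-crafted and computationally demanding components commonly used in other methods. What makes such a simple decoder possible is that the hierarchical Transformer encoder has a larger Effective Receptive Field (ERF) than conventional CNN encoders.

The proposed All-MLP decoder consists of the following four steps.

- The multi-level features Fi from the MiT encoder go through an MLP layer that unifies their channel dimension

- The features are upsampled to 1/4 of the original image size and concatenated

- An MLP fuses the concatenated features, reducing the 4x larger channel dimension back to the original size

- The segmentation mask is predicted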
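The four steps above can be sketched in NumPy as follows. This is a minimal sketch under assumptions, not the authors' implementation: nearest-neighbor upsampling is used for brevity, and the channel sizes, class count, and random weights are illustrative. All names are hypothetical.

```python
import numpy as np

def linear(x, w, b):
    """Per-pixel linear layer: (H, W, C_in) -> (H, W, C_out)."""
    return x @ w + b

def upsample_nearest(x, factor):
    """Nearest-neighbor upsampling of an (H, W, C) map (assumed for brevity)."""
    return x.repeat(factor, axis=0).repeat(factor, axis=1)

def all_mlp_decoder(features, proj, fuse, classify):
    target_h = features[0].shape[0]                      # the 1/4-resolution map
    unified = []
    for f, (w, b) in zip(features, proj):
        f = linear(f, w, b)                              # step 1: unify channels to C
        f = upsample_nearest(f, target_h // f.shape[0])  # step 2: upsample to 1/4
        unified.append(f)
    concat = np.concatenate(unified, axis=-1)            # step 2: concat -> 4C channels
    fused = linear(concat, *fuse)                        # step 3: 4C -> C
    return linear(fused, *classify)                      # step 4: C -> Ncls

def random_layer(rng, c_in, c_out):
    """Hypothetical randomly initialized (weight, bias) pair."""
    return rng.normal(scale=0.02, size=(c_in, c_out)), np.zeros(c_out)

rng = np.random.default_rng(0)
H = W = 64
in_channels = [32, 64, 160, 256]   # illustrative per-stage channel sizes
C, n_cls = 64, 5                   # illustrative decoder width and class count
features = [rng.normal(size=(H // s, W // s, c))
            for s, c in zip([4, 8, 16, 32], in_channels)]
proj = [random_layer(rng, c, C) for c in in_channels]
fuse = random_layer(rng, 4 * C, C)
classify = random_layer(rng, C, n_cls)

logits = all_mlp_decoder(features, proj, fuse, classify)
print(logits.shape)                # (16, 16, 5)
```

The output has the (H/4) x (W/4) x Ncls shape described in the Method section; taking the argmax over the last axis would give a per-pixel class map at that resolution.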

# Effective Receptive Field Analysis

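In semantic segmentation, maintaining a large receptive field so that context information is included is a central issue. Here the ERF is used as a tool to visualize and interpret why the MLP decoder design is so effective on Transformers. Figure 3 visualizes the ERFs of the encoder stages and the decoder head for DeepLabv3+ and SegFormer.

Figure 3 shows that the ERF of DeepLabv3+ is relatively small even at the deepest stage-4, whereas the SegFormer encoder naturally produces local attention at the lower stages while outputting non-local attention that effectively captures context at stage-4. In addition, as the zoomed-in patches show, the ERF of the MLP head (blue box) differs from that of stage-4 (red box): it exhibits noticeably stronger local attention in addition to the non-local attention.

Because of their limited receptive field, CNNs must rely on context modules such as ASPP, which enlarge the receptive field but inevitably become heavy. The authors explain that the SegFormer decoder benefits from the non-local attention of the Transformer and obtains a larger receptive field without being complex. The decoder design essentially takes advantage of a Transformer that produces both highly local and non-local attention.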

Experiments

Summary

SegFormer is a network that improves semantic segmentation performance by applying the following techniques in its Transformer encoder and its decoder.

Many of the findings demonstrated in this paper are useful references when applying Transformers to semantic segmentation, so they are worth keeping in mind.

Hierarchical Transformer Encoder : semantic segmentation performance ↑ + computational efficiency ↑

- Hierarchical Feature Representation : multi-scale/level features → semantic segmentation performance ↑

- Efficient Self-Attention : sequence reduction → computational efficiency ↑

- Overlapped Patch Merging : preserves local continuity → semantic segmentation performance ↑

- Positional-Encoding-Free Design : no PE → no drop in semantic segmentation performance even when training and test resolutions differ

- Lightweight All-MLP Decoder : no complex computation → computational efficiency ↑