
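- Information (information content) : the number of yes/no questions needed to remove uncertainty. For an event with probability p(x) this is I(x) = -log p(x), i.e. the log of the expected number of trials (1/p(x)) until the event occurs, so rarer events carry more information

- Entropy : the expected value of the information content under the probability distribution P(x), H(P) = -Σ P(x) log P(x). A uniform distribution is more uncertain than an imbalanced one, so its entropy is higher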

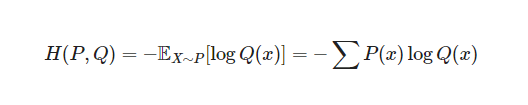

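- Cross Entropy : with P(x) the probability distribution of the data and Q(x) the distribution estimated by the model, a measure of how far Q is from P, H(P, Q) = -Σ P(x) log Q(x)

- KL-divergence : for two probability distributions P and Q, the information lost when Q is used in place of P to approximate it (Cross Entropy(P,Q) - Entropy(P))

The goal of a machine learning model is to minimize the KL divergence, the gap between the data distribution P and the model's predicted distribution Q. Since Entropy(P) depends only on the data and not on the model, it is a fixed value, so minimizing the KL divergence comes down to minimizing the Cross Entropy.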
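
The three quantities above can be checked numerically. The following is a minimal sketch in plain Python, assuming natural logarithms and small hand-picked distributions (`uniform`, `skewed`, `p`, `q` are illustrative values, not taken from any dataset):

```python
import math

def entropy(p):
    """H(P) = -sum P(x) log P(x); terms with P(x) = 0 contribute nothing."""
    return -sum(px * math.log(px) for px in p if px > 0)

def cross_entropy(p, q):
    """H(P, Q) = -sum P(x) log Q(x)."""
    return -sum(px * math.log(qx) for px, qx in zip(p, q) if px > 0)

def kl_divergence(p, q):
    """KL(P || Q) = sum P(x) log(P(x) / Q(x))."""
    return sum(px * math.log(px / qx) for px, qx in zip(p, q) if px > 0)

# Illustrative distributions (assumed values).
uniform = [0.25, 0.25, 0.25, 0.25]
skewed = [0.7, 0.1, 0.1, 0.1]

# The uniform distribution has the higher entropy.
print(entropy(uniform), entropy(skewed))

p = [0.5, 0.3, 0.2]  # stands in for the data distribution
q = [0.4, 0.4, 0.2]  # stands in for the model's estimate

# Both expressions give the same value: KL = Cross Entropy - Entropy.
print(kl_divergence(p, q), cross_entropy(p, q) - entropy(p))
```

Because `entropy(p)` does not change when `q` changes, any `q` that lowers the cross entropy lowers the KL divergence by the same amount.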

Cross Entropy Loss

- Cross entropy loss is the usual choice for classification problems

- The true distribution P is the ground truth, given as a one-hot encoded vector

- The prediction distribution Q is the model's output after the softmax layer, so the per-class probabilities sum to 1

e.g.) For P = [0, 1, 0] and Q = [0.2, 0.7, 0.1], only the true class contributes to the sum, so the cross entropy loss is -log(0.7) ≈ 0.357 (natural log).
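
The example with P = [0, 1, 0] and Q = [0.2, 0.7, 0.1] can be reproduced with a short sketch. It assumes natural logarithms, and the `logits` values are hypothetical, included only to show where a softmax output like Q comes from:

```python
import math

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy_loss(p, q):
    """-sum P(x) log Q(x); with a one-hot P only the true class remains."""
    return -sum(px * math.log(qx) for px, qx in zip(p, q) if px > 0)

p = [0, 1, 0]        # one-hot ground truth
q = [0.2, 0.7, 0.1]  # model prediction after softmax

loss = cross_entropy_loss(p, q)
print(round(loss, 4))  # 0.3567, i.e. -log(0.7)

# Hypothetical logits: the softmax output is a valid distribution.
logits = [1.0, 2.5, 0.3]
probs = softmax(logits)
print(sum(probs))
```

With a one-hot target the loss only looks at the probability assigned to the correct class, so it falls toward 0 as that probability approaches 1.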

Mean Squared Error (MSE) Loss

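- The average of the squared differences between the predictions and the ground truth

- Because of the squaring, larger errors are amplified and stand out more in the loss

- Mainly used in regression, where a continuous quantity is estimated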
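
A minimal sketch of MSE in plain Python, using made-up target and prediction values (`y_true`, `small_errors`, `one_large_error` are assumptions for illustration), shows how squaring makes a single large error dominate:

```python
def mse_loss(y_true, y_pred):
    """Mean of squared differences between targets and predictions."""
    return sum((t - y) ** 2 for t, y in zip(y_true, y_pred)) / len(y_true)

y_true = [1.0, 2.0, 3.0]

# Every prediction is off by 0.1.
small_errors = [1.1, 2.1, 3.1]

# Two predictions are exact, one is off by 2.0.
one_large_error = [1.0, 2.0, 5.0]

print(mse_loss(y_true, small_errors))
print(mse_loss(y_true, one_large_error))
```

The single error of 2.0 contributes 4.0 to the sum before averaging, while each error of 0.1 contributes only about 0.01.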

Cross Entropy Loss vs. MSE Loss

- When the data is continuous and close to a Gaussian distribution → MSE Loss

- When the data is discrete and close to a Bernoulli (or categorical) distribution → Cross Entropy Loss

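*From a probabilistic point of view, the output of a deep learning network is interpreted through an assumed probability distribution (Gaussian, Bernoulli, ...). The role of the trained model f(x) is to estimate the parameters of that distribution, and the loss evaluates the likelihood of the ground truth under the estimated distribution, as a negative log-likelihood. Updating the network parameters in the direction that minimizes the loss is therefore the same as maximizing the likelihood.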
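
The link between loss and likelihood can be checked numerically. The sketch below assumes a Gaussian with a fixed standard deviation of 1 whose mean is the model's prediction, and uses made-up values for `y_true` and `mu`; under that assumption the average negative log-likelihood is half the MSE plus a constant:

```python
import math

def mse(y_true, y_pred):
    return sum((t - y) ** 2 for t, y in zip(y_true, y_pred)) / len(y_true)

def gaussian_nll(y_true, mu, sigma=1.0):
    """Average negative log-likelihood of y_true under N(mu, sigma^2)."""
    const = 0.5 * math.log(2 * math.pi * sigma ** 2)
    return sum(const + (t - m) ** 2 / (2 * sigma ** 2)
               for t, m in zip(y_true, mu)) / len(y_true)

def bernoulli_nll(y_true, prob):
    """Average negative log-likelihood of 0/1 labels under Bernoulli(prob)."""
    return -sum(t * math.log(p) + (1 - t) * math.log(1 - p)
                for t, p in zip(y_true, prob)) / len(y_true)

# Illustrative regression targets and predicted means (assumed values).
y_true = [1.0, 2.0, 3.0]
mu = [1.5, 1.8, 2.4]

nll = gaussian_nll(y_true, mu)
shifted_mse = 0.5 * mse(y_true, mu) + 0.5 * math.log(2 * math.pi)
print(nll, shifted_mse)  # the two values agree

# Illustrative binary labels and predicted probabilities (assumed values).
labels = [1, 0, 1]
probs = [0.9, 0.2, 0.6]
print(bernoulli_nll(labels, probs))  # this is the binary cross entropy
```

Since the constant term does not depend on the model, minimizing MSE maximizes the Gaussian likelihood, and minimizing cross entropy maximizes the Bernoulli likelihood.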
